Pluralsight – Introduction to Reinforcement Learning from Human Feedback (RLHF) 2025

English | Tutorial | Size: 44.71 MB

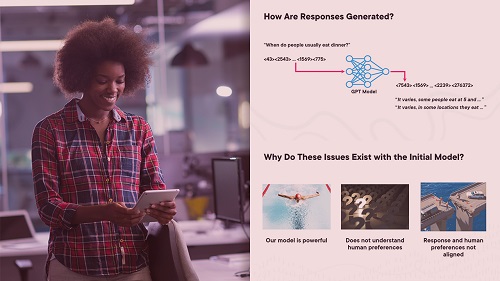

You’ve been hired as an AI Engineer at Globomantics to fix a misaligned LLM. This course will teach you how to use RLHF to design feedback systems and develop value-based alignment strategies for responsible AI deployment.